The DARPA Intrinsic Cognitive Security (ICS) program is studying the feasibility of applying formal methods to the cognitive modeling domain to verify that operators of tactical mixed-reality (MR) systems are protected from adversarial cognitive attacks. This new class of attack exploits the intimate connection between users and MR equipment. Traditional methods used in current DoD systems to address cybersecurity threats do not address user cognitive effects. Establishment of protection guarantees based on models can provide a rigorous basis from which to build protected MR systems. Adversary exploitation of security vulnerabilities associated with widespread MR use is a potential technological surprise. Methods for developing protected MR systems will be developed by the ICS program before MR systems lacking protections are pervasive and essential.

Collins Aerospace, RTX Technology Research Center (RTRC), Iowa State University (ISU), Smart Information Flow Technologies (SIFT), and Florida Institute of Technology (FIT) have teamed to address the challenges posed by the DARPA ICS program, which kicked off in July 2024.

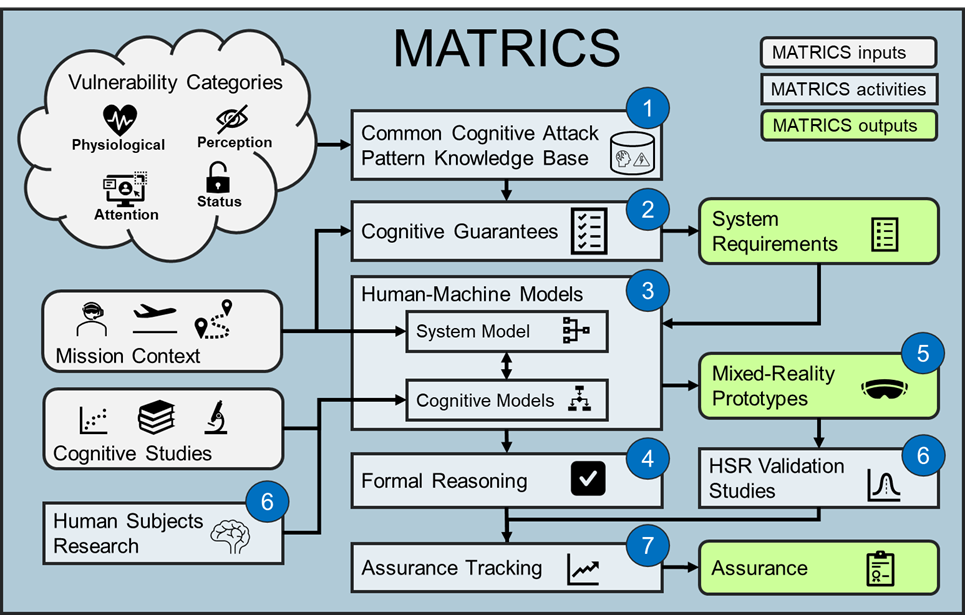

MATRICS will develop methods and tools for designing MR systems and assuring protection from cognitive cyber-attacks. This past decade has witnessed an explosion of model-based engineering and formal analysis techniques geared towards cybersecure high-assurance systems. However, analysis of these systems typically leaves out one key ingredient: the user. Even when aspects of user behavior are included in a system model, an assumption is often made that the user will respond rationally to stimuli and correctly perform specific procedures to achieve mission objectives. In MR systems, the interactions between the user, system, and the environment are so entwined that they cannot be treated separately. They must be modeled and analyzed together to ensure the entire human-machine system is secure against novel classes of MR attacks. Doing so necessarily requires accurate models of human cognitive behavior as well as new formal analysis methods and tools that can provide the rigorous assurance needed before these systems can be safely deployed.

Our technical plan for MATRICS is distinguished by the following characteristics:

- Cognitive Attack Pattern Knowledge Base. Effective protection against cognitive cyber-attacks in MR systems requires an understanding of how an adversary may exploit a vulnerability. Currently, there is no publicly available resource that captures and classifies this information. We will compile a Cognitive Attack and Mitigation Pattern (CAMP) knowledgebase, similar to MITRE’s Common Attack Pattern Enumeration and Classification (CAPEC), but specific to the ICS domain. The CAMP knowledgebase will be built around a classification schema aligned with the MATRICS workflow. We will populate the knowledgebase with cognitive attack patterns corresponding to mission scenarios aligned with each vulnerability category.

- Formal Requirements Languages. Existing requirement formalisms were not designed or evaluated for specifying MR system concepts involving human and machine interaction. We will evaluate and adapt formal requirements languages and tools we previously developed (e.g., AGREE, PRISMATIC, and Resolute), as well as other widely used formalisms, to establish cognitive guarantees. This effort will result in a new collection of novel specification languages with the expressive power required for proving MR designs protect users from four categories of cognitive attack.

- Formal Human-Machine Models. We will generate formal human-machine models from cognitive theories within each category that are relevant to mission scenarios of interest. We will apply innovative computational human-machine modeling techniques to uncover hidden structures in human behaviors for computationally tractable models of human cognition. We will represent cognitive models using non-traditional formalisms such as Markov Decision Process (MDP), state transition systems, and probabilistic automata for expressing timing and probabilistic behaviors of the operator under various cognitive attacks.

- Formal Reasoning for ICS. We will use our team’s unique capabilities in developing novel and useful formal methods tools to demonstrate the feasibility and benefit of analyzing cognitive guarantees to determine whether they permit undesired behaviors, as well as check for logical consistency for identifying conflicts and unrealizable conditions. We will adapt and apply probabilistic modeling and verification frameworks (e.g., PRISMATIC, SMART) to prove cognitive guarantees in MR system designs, as well as enhance compositional assume-guarantee reasoning (AGREE) to support probabilities and uncertainty. In addition, we will enable formal analysis of integrated cognitive, device, and environment models via adapter and translator implementations that support multiple design and analysis frameworks.

- Mixed-Reality Prototypes. We will build MR prototypes to validate cognitive guarantees for each vulnerability category. In addition, we will create interactive scenarios that include 1) real-world hardware with embedded systems with which a user can engage (individual-scale space) or 2) multiple people and objects moving (room-scale space). These scenarios will be integrated into novel Testing and Validation Environments (TVEs), which have a mission scenario at their core, but also integrate systematic parameterization of scenario variables for research purposes and detailed data logging for analysis purposes.

- Human Subjects Research Studies. We will elicit cognitive models from prior human studies conducted by our team. This data is of higher-quality and depth than what is generally available. Where prior data or the literature does not sufficiently address a vulnerability category, we will conduct new human subjects research studies to fill in those gaps. We will also conduct HSR validation studies to determine whether the MR prototypes can defeat hacker efforts via the guarantees. Studies will recruit from DoD-relevant populations, especially focusing on ROTC cadets from ISU’s Army, Air Force, and Navy training programs.

- ICS Assurance. Unlike traditional system cybersecurity, there is a lack of guidance for assuring that MR systems are truly protected from cognitive attack. Formal analysis results alone will not be sufficient. Therefore, we will define formative ICS assurance patterns corresponding to the attack patterns that motivate our mission scenarios. The assurance patterns will include the necessary arguments that a given MR system or operator is protected from attack, as well as the evidence that is required to substantiate the assurance argument.

Tools and models being developed on the MATRICS project are open-source and are available on the Loonwerks GitHub.